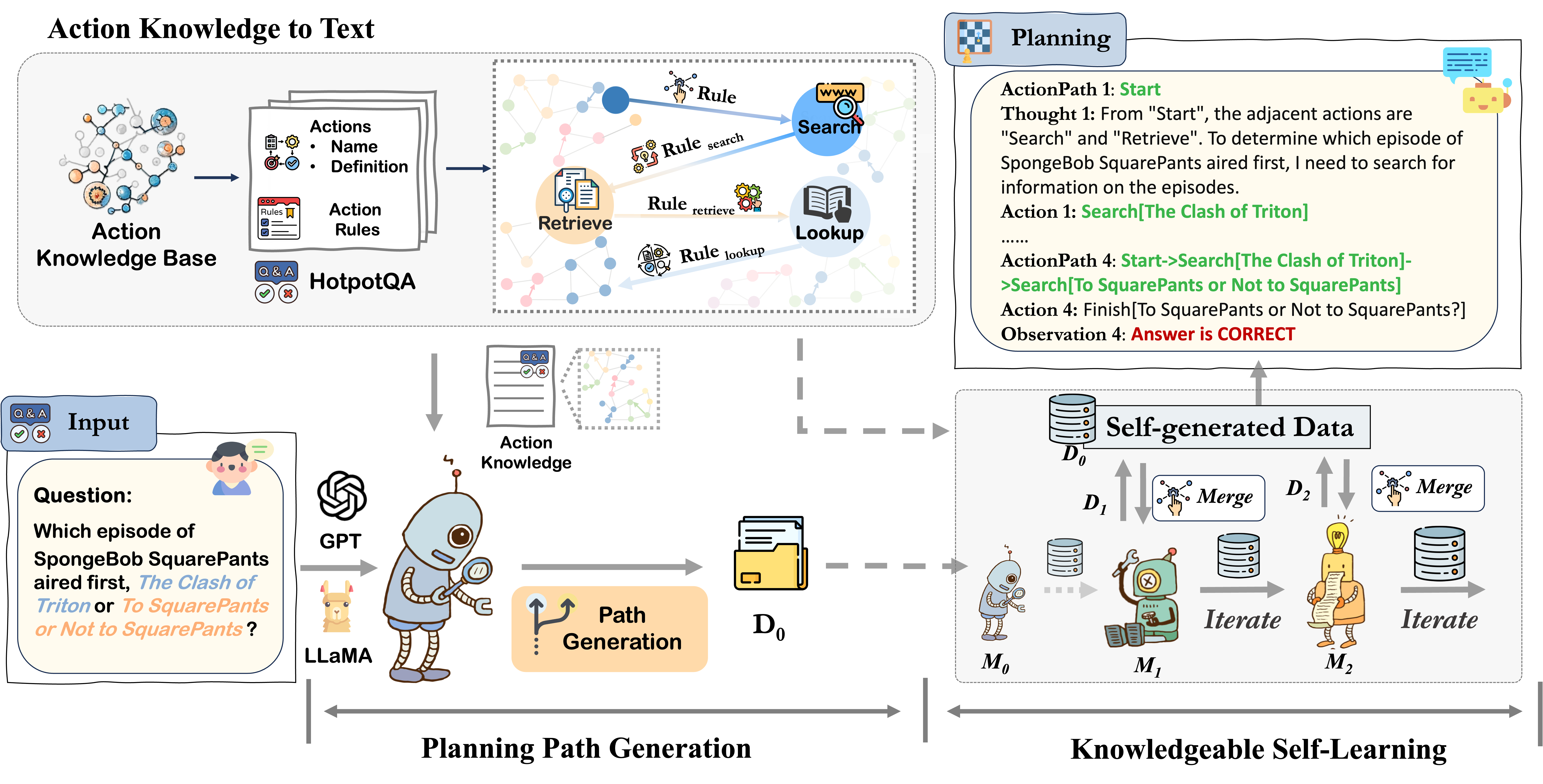

Large Language Models (LLMs) have demonstrated great potential in complex reasoning tasks, yet they fall short on more sophisticated challenges, especially those that require interacting with an environment by generating executable actions. This shortcoming stems primarily from the lack of built-in action knowledge in language agents. Without it, agents cannot effectively guide their planning trajectories during task solving, which results in planning hallucination. To address this issue, we introduce KnowAgent, a novel approach that enhances the planning capabilities of LLMs by incorporating explicit action knowledge. Specifically, KnowAgent employs an action knowledge base and a knowledgeable self-learning strategy to constrain the action path during planning, enabling more reasonable trajectory synthesis and thereby improving the planning performance of language agents. Experimental results on HotpotQA and ALFWorld with various backbone models demonstrate that KnowAgent achieves performance comparable or superior to existing baselines. Further analysis shows that KnowAgent is effective at mitigating planning hallucinations.
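To make the idea of an action knowledge base more concrete, here is a minimal Python sketch, assuming the knowledge can be written as a table of permitted transitions between action types. The action names (`Search`, `Retrieve`, `Lookup`, `Finish`), the `ACTION_RULES` table, and the `is_valid_path` helper are illustrative assumptions, not KnowAgent's actual knowledge base or code; the sketch only shows how such rules can reject an action path before it is executed.

```python
from typing import Dict, List, Set

# Hypothetical action knowledge for a HotpotQA-style task (illustrative only):
# each key lists the actions allowed to follow it. "Start" marks the beginning
# of a trajectory and is not itself an action.
ACTION_RULES: Dict[str, Set[str]] = {
    "Start": {"Search", "Retrieve"},
    "Search": {"Search", "Retrieve", "Lookup", "Finish"},
    "Retrieve": {"Search", "Retrieve", "Lookup", "Finish"},
    "Lookup": {"Search", "Retrieve", "Lookup", "Finish"},
    "Finish": set(),  # terminal action: nothing may follow it
}


def is_valid_path(path: List[str], rules: Dict[str, Set[str]] = ACTION_RULES) -> bool:
    """Return True if every transition is permitted and the path ends with Finish."""
    previous = "Start"
    for action in path:
        # Unknown actions and forbidden transitions are both rejected here.
        if action not in rules.get(previous, set()):
            return False
        previous = action
    return previous == "Finish"


print(is_valid_path(["Search", "Lookup", "Finish"]))  # True
print(is_valid_path(["Lookup", "Finish"]))            # False: Start -> Lookup is not permitted
```

Keeping the rules in a declarative table means a different environment (for example, an ALFWorld-style household task) would only need a different table, not different checking code.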

Figure 1: Overview of our proposed framework, KnowAgent.

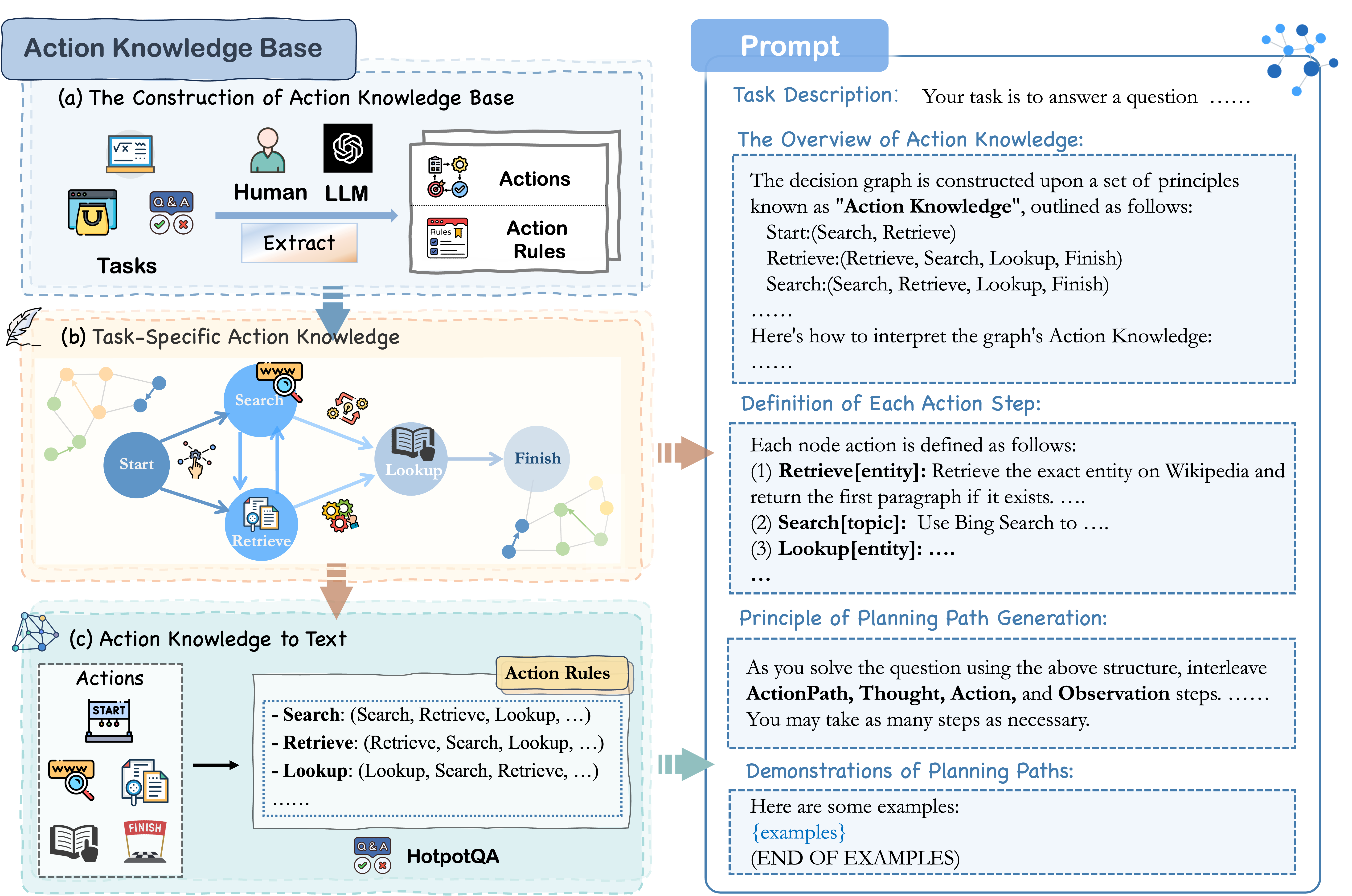

Figure 2: The Path Generation process of KnowAgent.
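The caption does not spell out the generation procedure, so the following is only a hedged sketch of one way action knowledge can constrain path generation. At each step a proposer (standing in for the LLM) ranks candidate actions, and the first candidate permitted by the rules is kept. `RULES`, `generate_path`, and `toy_proposer` are hypothetical names introduced for illustration, not part of KnowAgent's released code.

```python
from typing import Callable, Dict, List, Set

# Hypothetical transition rules ("Start" is a sentinel, not an action).
RULES: Dict[str, Set[str]] = {
    "Start": {"Search", "Retrieve"},
    "Search": {"Search", "Retrieve", "Lookup", "Finish"},
    "Retrieve": {"Search", "Retrieve", "Lookup", "Finish"},
    "Lookup": {"Search", "Retrieve", "Lookup", "Finish"},
    "Finish": set(),
}


def generate_path(propose: Callable[[List[str]], List[str]], max_steps: int = 10) -> List[str]:
    """Build an action path step by step, keeping only rule-compliant proposals."""
    path: List[str] = []
    current = "Start"
    for _ in range(max_steps):
        allowed = RULES.get(current, set())
        if not allowed:  # terminal action (e.g., Finish) reached
            break
        # Keep the proposer's ranking but discard actions the rules forbid here.
        candidates = [a for a in propose(path) if a in allowed]
        if not candidates:  # no permitted proposal: stop rather than emit an invalid action
            break
        current = candidates[0]
        path.append(current)
    return path


def toy_proposer(path: List[str]) -> List[str]:
    """Stand-in for an LLM: prefers Lookup early, then Finish after two steps."""
    if len(path) >= 2:
        return ["Finish", "Lookup"]
    return ["Lookup", "Search", "Finish"]


print(generate_path(toy_proposer))  # ['Search', 'Lookup', 'Finish']
```

On the first step the toy proposer prefers `Lookup`, but `Start -> Lookup` is not permitted, so `Search` is chosen instead; this is the kind of correction that action knowledge is meant to provide.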

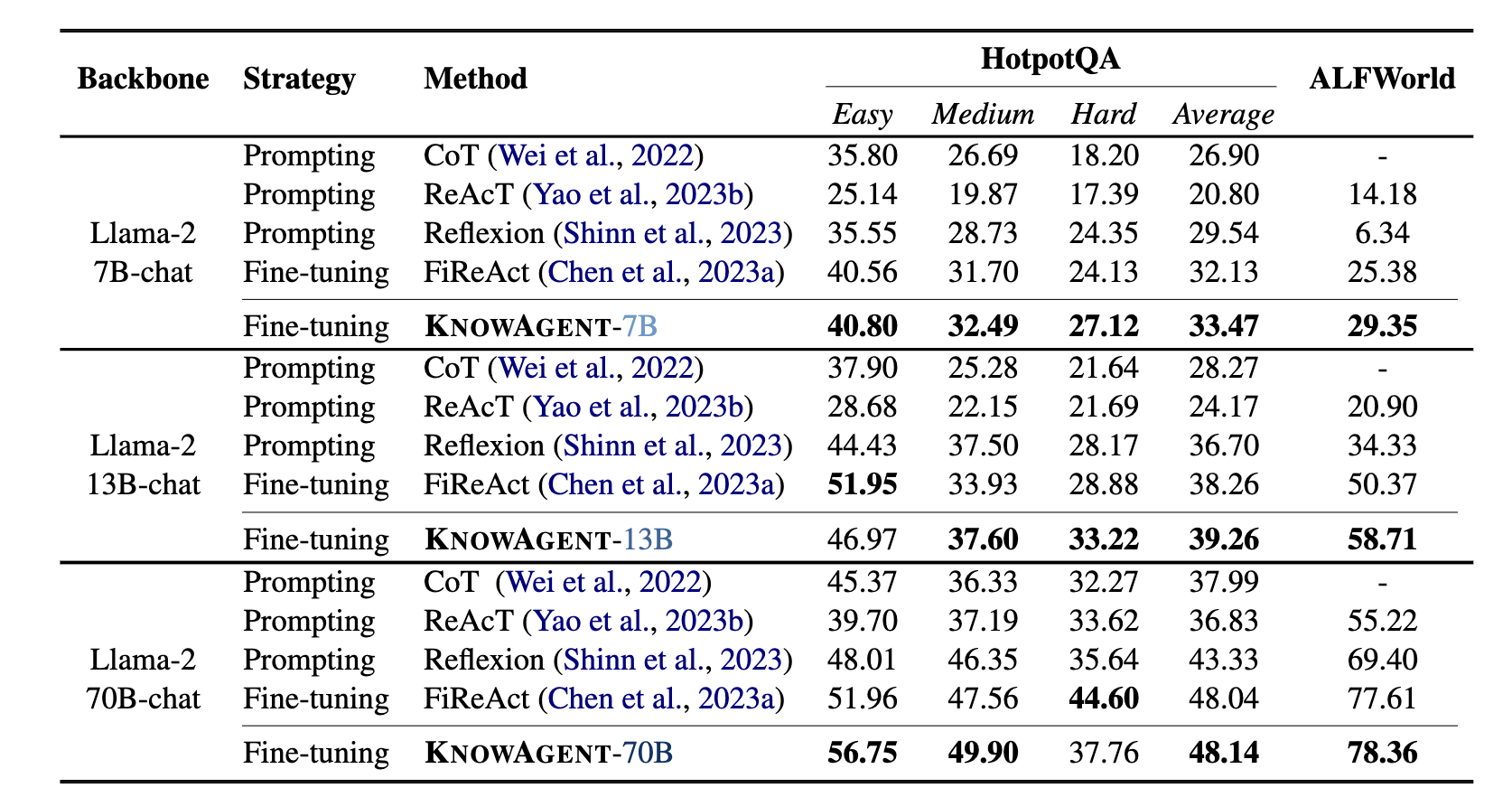

Table 1: Overall performance of KnowAgent on HotpotQA and ALFWorld. The evaluation metrics are F1 score (%) and success rate (%), respectively. Strategy denotes the agent learning paradigm behind each method. The best results for each backbone are marked in bold.
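For reference, HotpotQA answers are commonly scored with SQuAD-style token-level F1, and ALFWorld with the fraction of tasks completed. The sketch below is a simplified version of these standard metrics (it omits, for instance, the special handling of yes/no answers in the official HotpotQA script) and is an assumption about, not a copy of, the evaluation code behind Table 1.

```python
import re
import string
from collections import Counter
from typing import List

_PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def f1_score(prediction: str, gold: str) -> float:
    """Token-level F1 between a predicted and a gold answer (range 0 to 1)."""
    pred_tokens = normalize_answer(prediction).split()
    gold_tokens = normalize_answer(gold).split()
    # Multiset intersection counts each shared token at most min(count) times.
    common = Counter(pred_tokens) & Counter(gold_tokens)
    num_same = sum(common.values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(pred_tokens)
    recall = num_same / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


def success_rate(outcomes: List[bool]) -> float:
    """Percentage of episodes (e.g., ALFWorld tasks) that were completed."""
    if not outcomes:
        return 0.0
    return 100.0 * sum(outcomes) / len(outcomes)


print(round(100 * f1_score("The Eiffel Tower", "Eiffel Tower in Paris"), 2))  # 66.67
print(success_rate([True, False, True, True]))  # 75.0
```

Per-question F1 values are averaged over the test set and multiplied by 100 to give the percentages reported in the table.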

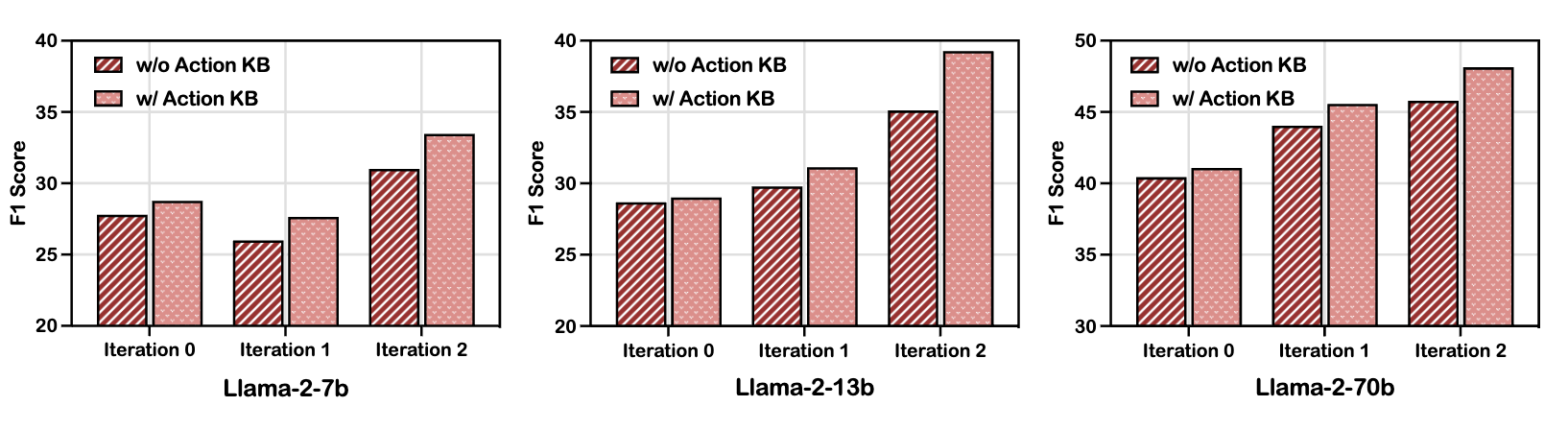

Figure 3: Ablation study on action knowledge with Llama-2 models on HotpotQA. Here, w/ Action KB denotes the original KnowAgent, and w/o Action KB denotes KnowAgent with the task-specific action knowledge removed.

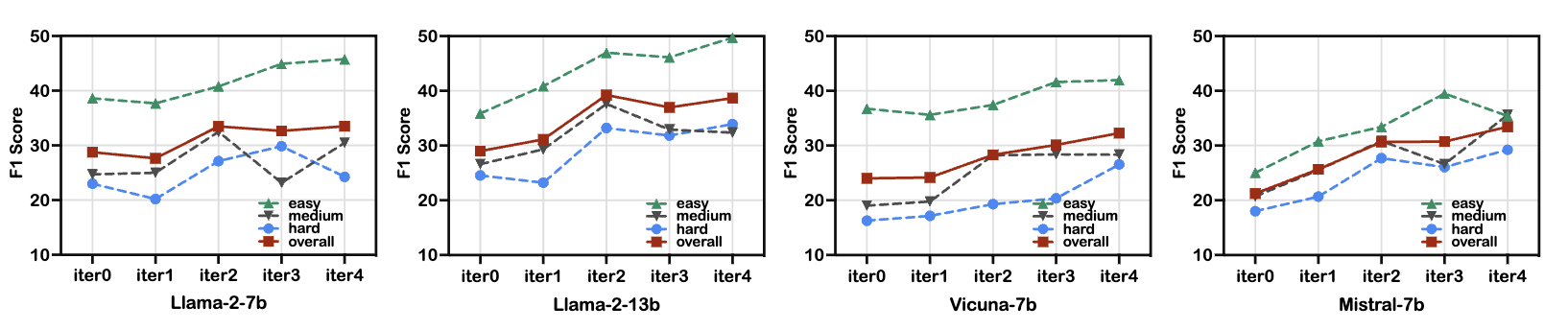

Figure 4: Ablation study on the number of knowledgeable self-learning iterations. We examine the influence of self-learning iterations on several models: Llama-2-7b, Llama-2-13b, Vicuna-7b, and Mistral-7b. Here, Iter0 represents baseline performance prior to any training.
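The self-learning loop whose iterations are varied in Figure 4 can be sketched as repeated rounds of generating trajectories, keeping the good ones, and fine-tuning on them. The sketch below assumes a filtering step that keeps only correct, rule-compliant trajectories and a merging step that keeps the shortest surviving trajectory per task; these criteria, along with `Trajectory`, `filter_and_merge`, and `self_learn`, are illustrative assumptions rather than the paper's exact procedure. Generation and fine-tuning are passed in as callables so the loop does not depend on any particular training library.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set, TypeVar

M = TypeVar("M")  # whatever object represents the model (hypothetical)

# Hypothetical transition rules used to check action paths.
RULES: Dict[str, Set[str]] = {
    "Start": {"Search", "Retrieve"},
    "Search": {"Search", "Retrieve", "Lookup", "Finish"},
    "Retrieve": {"Search", "Retrieve", "Lookup", "Finish"},
    "Lookup": {"Search", "Retrieve", "Lookup", "Finish"},
    "Finish": set(),
}


@dataclass
class Trajectory:
    task_id: str
    actions: List[str]
    correct: bool  # True if the trajectory reached the right answer/outcome


def is_valid(actions: List[str]) -> bool:
    """Check that every transition is permitted and the path ends with Finish."""
    previous = "Start"
    for action in actions:
        if action not in RULES.get(previous, set()):
            return False
        previous = action
    return previous == "Finish"


def filter_and_merge(trajectories: List[Trajectory]) -> List[Trajectory]:
    best: Dict[str, Trajectory] = {}
    for traj in trajectories:
        # Filtering: drop trajectories with a wrong outcome or a rule-violating path.
        if not (traj.correct and is_valid(traj.actions)):
            continue
        # Merging (assumed criterion): keep the shortest surviving path per task.
        kept = best.get(traj.task_id)
        if kept is None or len(traj.actions) < len(kept.actions):
            best[traj.task_id] = traj
    return list(best.values())


def self_learn(
    model: M,
    generate: Callable[[M], List[Trajectory]],
    finetune: Callable[[M, List[Trajectory]], M],
    iterations: int,
) -> M:
    """Run `iterations` rounds of generate -> filter/merge -> fine-tune."""
    for _ in range(iterations):
        training_data = filter_and_merge(generate(model))
        model = finetune(model, training_data)
    return model


samples = [
    Trajectory("q1", ["Search", "Finish"], True),
    Trajectory("q1", ["Search", "Lookup", "Finish"], True),  # longer duplicate
    Trajectory("q2", ["Lookup", "Finish"], True),            # violates Start -> Lookup
    Trajectory("q3", ["Search", "Finish"], False),           # wrong answer
]
print([t.actions for t in filter_and_merge(samples)])  # [['Search', 'Finish']]
```

With `iterations=0` the function returns the untouched model, which corresponds to the Iter0 baseline in the figure.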

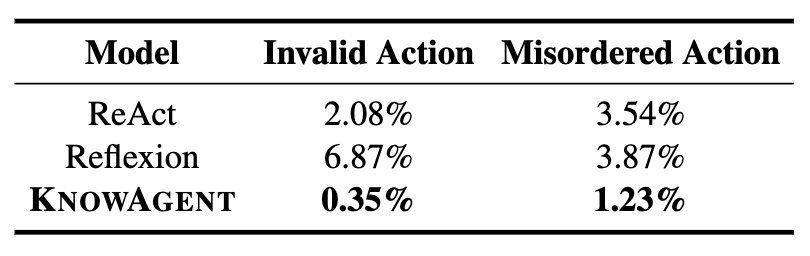

Table 2: Unreasonable action rates on HotpotQA with Llama-2-13b. Here, invalid refers to actions that do not meet the action rules, while misordered refers to discrepancies in the logical sequence of actions.
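The two categories in Table 2 can be computed from logged action sequences. The sketch below assumes one reading of the caption: an action is invalid if it is not in the task's action set, and misordered if it is a known action that the transition rules do not allow after the previous valid action. `ACTION_SET`, `RULES`, and `unreasonable_action_rates` are hypothetical; rates are expressed as percentages of all actions taken.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical action set and transition rules ("Start" is a sentinel, not an action).
ACTION_SET: Set[str] = {"Search", "Retrieve", "Lookup", "Finish"}
RULES: Dict[str, Set[str]] = {
    "Start": {"Search", "Retrieve"},
    "Search": {"Search", "Retrieve", "Lookup", "Finish"},
    "Retrieve": {"Search", "Retrieve", "Lookup", "Finish"},
    "Lookup": {"Search", "Retrieve", "Lookup", "Finish"},
    "Finish": set(),
}


def unreasonable_action_rates(paths: List[List[str]]) -> Tuple[float, float]:
    """Return (invalid %, misordered %) over all actions in the given paths."""
    total = invalid = misordered = 0
    for path in paths:
        previous = "Start"
        for action in path:
            total += 1
            if action not in ACTION_SET:
                invalid += 1  # unknown action; `previous` stays unchanged
                continue
            if action not in RULES[previous]:
                misordered += 1  # known action, but not allowed at this point
            previous = action
    if total == 0:
        return 0.0, 0.0
    return 100.0 * invalid / total, 100.0 * misordered / total


print(unreasonable_action_rates([["Search", "Finish"], ["Lookup", "Jump"]]))  # (25.0, 25.0)
```

Leaving `previous` unchanged after an invalid action avoids counting the same mistake twice, once as invalid and again as a misordered successor.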

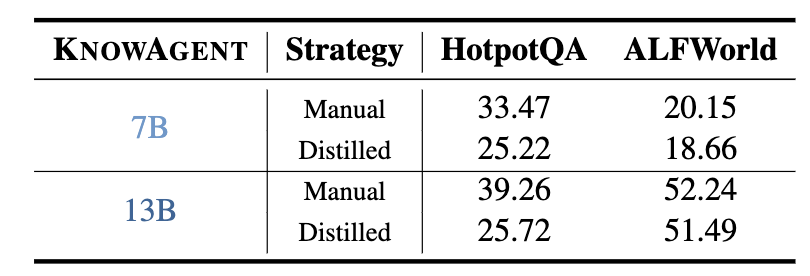

Table 3: Comparison of manual and distilled action knowledge. Manual denotes human-crafted knowledge, and Distilled denotes knowledge distilled from GPT-4.

@article{zhu2024knowagent,
  title={KnowAgent: Knowledge-Augmented Planning for LLM-Based Agents},
  author={Zhu, Yuqi and Qiao, Shuofei and Ou, Yixin and Deng, Shumin and Zhang, Ningyu and Lyu, Shiwei and Shen, Yue and Liang, Lei and Gu, Jinjie and Chen, Huajun},
  journal={arXiv preprint arXiv:2403.03101},
  year={2024}
}

This website is adapted from Nerfies, licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.